The CoALA Lab

Co-Augmentation, Co-Learning, & Collaborative AI

Research areas

Augmenting human intelligence

How can we design technologies that augment human cognitive abilities — bringing out the best of human expertise to address complex real-world challenges?

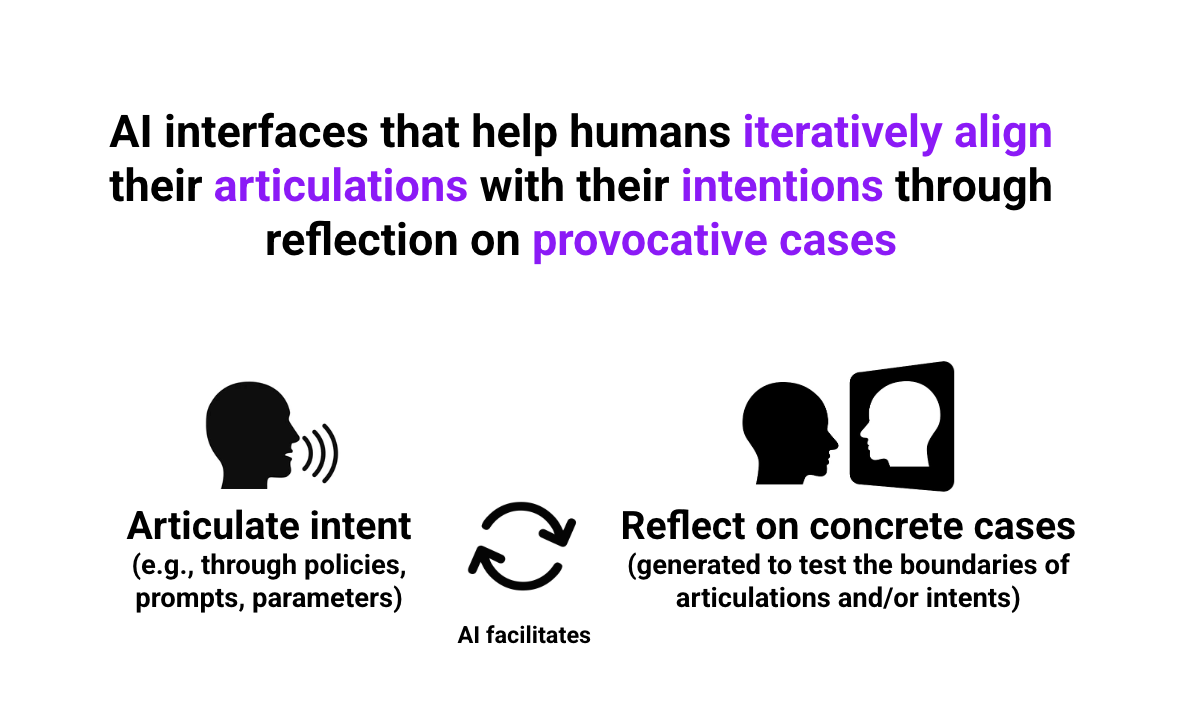

Participatory and expertise-driven AI

How can we scaffold more collaborative and participatory approaches to AI development — integrating diverse human expertise (e.g., domain, lived, and technical expertise) across the lifecycle from ideation to problem formulation to design & evaluation?

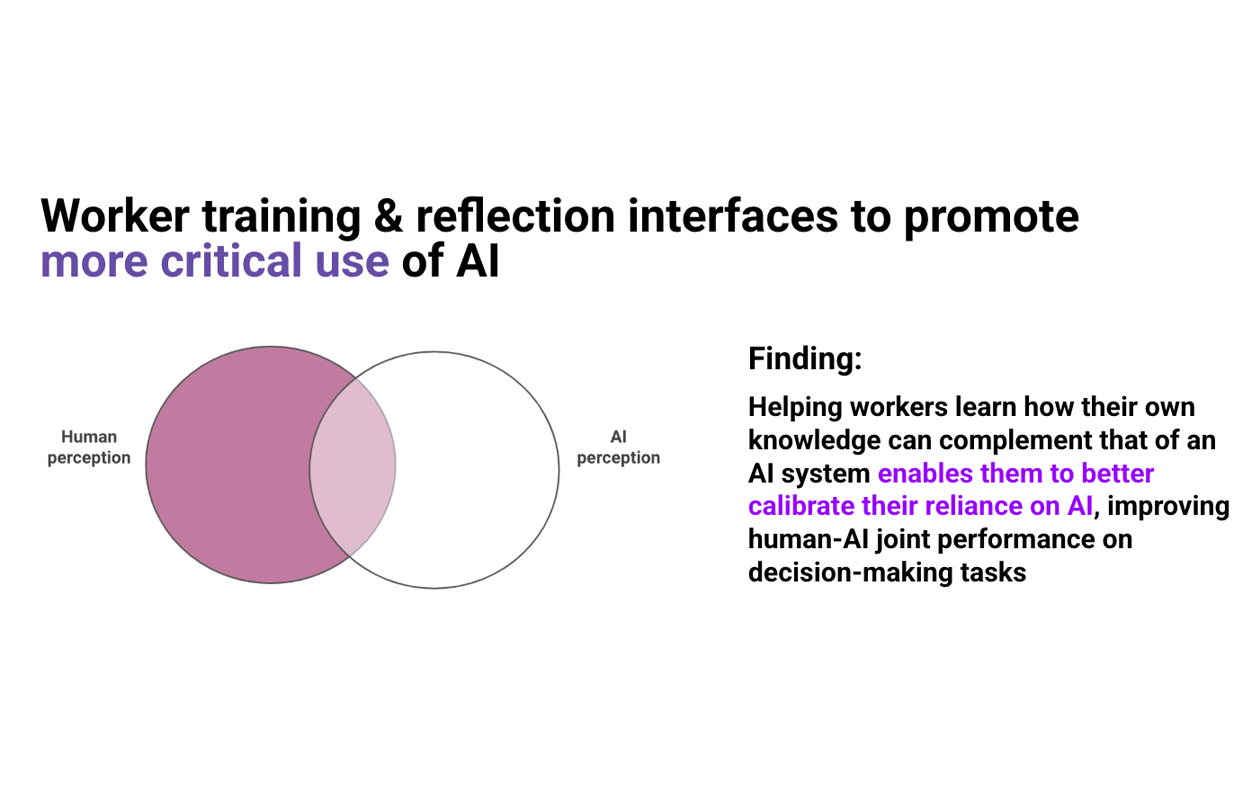

Designing for complementarity

How might we design systems to combine complementary strengths of humans and AI systems — elevating human expertise and on-the-ground knowledge rather than diminishing it?

Values

Taking an iterative, de-risking approach to research. At each stage of a project, we identify the quickest research methods (e.g., interviews, observations, lo-fi prototypes, or minimal deployments) to get the insights we need to resolve the largest uncertainties. We regularly reflect and re-plan together.

Giving credit where it is due. We credit each other and other researchers/designers appropriately! For instance, this set of principles borrows from Glassman Lab @ Harvard SEAS and from the OH Lab @ CMU. This website’s header image and lab logo were designed by Natalia Oskiera and Mary Beth Kery, respectively!

Understanding the broader contexts in which we work, and addressing meaningful problems. Many of the issues we study in this lab (e.g., in human-AI interaction, futures of work, and algorithmic justice & fairness) are tied to deep-rooted systemic, societal problems. At times, our expertise in HCI will be best suited towards making meaningful progress on relatively small pieces of these problems. However, we will frequently reflect on how we might more effectively direct our efforts, through new interdisciplinary collaborations, research-practice and community partnerships, or problem reformulation.

Taking care of ourselves and each other. We prioritize getting enough sleep and attending to our mental and physical health, so that we can bring our best selves to our work (see “Lab Counterculture” by Jess Hammer, Alexandra To, & Erica P. Cruz). We also actively work to support each other, and lift each other up! …And even if not everyone is the best of friends, we are civil to each other, and show each other respect as colleagues.

Making tacit knowledge visible. We believe that each generation of researchers and designers should work to ensure that the next generation faces fewer unnecessary hurdles than they themselves did. With fewer hurdles in their way, more people will have more opportunities to advance our fields. In line with this belief, we value sharing tacit knowledge with newcomers to the lab, and shining a light on “hidden curricula”.

Supporting students’ goals, whatever those may be. We recognize that students at all levels may have a wide range of career goals. We support each other’s goals and help each other reach them!

Reflecting on our personal and community practices. This set of values will evolve over time. Every lab member can contribute to shaping the kind of lab they want to be a part of, and we will work together to make it happen!

To learn more, check out the CoALA Lab PhD Handbook!

People

Current CoALAs

Ken Holstein

Lab Director

Anna Kawakami

PhD Researcher

(HCI + Human-AI Interaction + Policy)

co-advisor: Haiyi Zhu

Frederic Gmeiner

PhD Researcher

(HCI + Design + Human-AI Interaction)

co-advisor: Nik Martelaro

Luke Guerdan

PhD Researcher

(ML + HCI + Human-AI Interaction)

co-advisor: Steven Wu

Tzu-Sheng Kuo

PhD Researcher

(HCI + Human-AI Interaction + Social Computing)

co-advisor: Haiyi Zhu

Wesley Deng

PhD Researcher

(HCI + Human-AI Interaction)

co-advisor: Motahhare Eslami

Alicia DeVrio

PhD Researcher

(HCI + Critical Algorithm Studies)

Julia Chadwick

Administrative Coordinator

Alums

PhD & Postdoctoral

Charvi Rastogi

→ Research Scientist at Google DeepMind

Devansh Saxena

→ Asst. Professor of Data & Information Sciences at University of Wisconsin-Madison

LuEttaMae Lawrence

→ Asst. Professor of Instructional Technologies & Learning Sciences at Utah State University

Alex Ahmed

→ Developer at Sassafras Tech Collective

Masters

Free S. Bàssïbét

Chance Castaneda

Monica Chang

Yu-Jan Chang

Connie Chau

Yang Cheng

Erica Chiang

David Contreras

Aditi Dhabalia

Harnoor Dhingra

Yvonne Fang

Bill Guo

Anushri Gupta

Madeleine Hagar

Howard (Ziyu) Han

Gena Hong

Alison Hu

Meijie Hu

Karen Kim

Ankita Kundu

Janice Lyu

Ahana Mukhopadhyay

Yunmin Oh

Matthew Ok

Will Page

Lauren Park

Diana Qing

Will Rutter

Harkiran Kaur Saluja

Andrew Sim

Anita Sun

Sophia Timko

Sonia Wu

Shixian Xie

Linda Xue

Yuchen Yao

Jieyu Zhou

Undergraduate & Postbacc

Erica Chiang

Zirui Cheng

Jamie Conlin

Jiwoo Kim

Sophia Liu

Kaitao Luo

Vianna Seifi

Donghoon Shin

Elena Swecker

Eric Tang

Mera Tegene

Lakshmi Tumati

Mahika Varma

Ming (Yi-Hao) Wang

Sonia Wu

Candace Williams

Zac Yu

Justin Zhang

Highlighted work

Botender: Supporting communities in collaboratively designing AI agents through case-based provocations [CHI’26]

Kuo, T. S., Liu, S., Chen, Q. Z., Seering, J., Zhang, A. X., Zhu, H.**, Holstein, K.**

Policy maps: Tools for guiding the unbounded space of LLM behaviors [UIST’25]

Lam, M. S., Hohman, F., Moritz, D., Bigham, J. P., Holstein, K.**, Kery, M. B.**

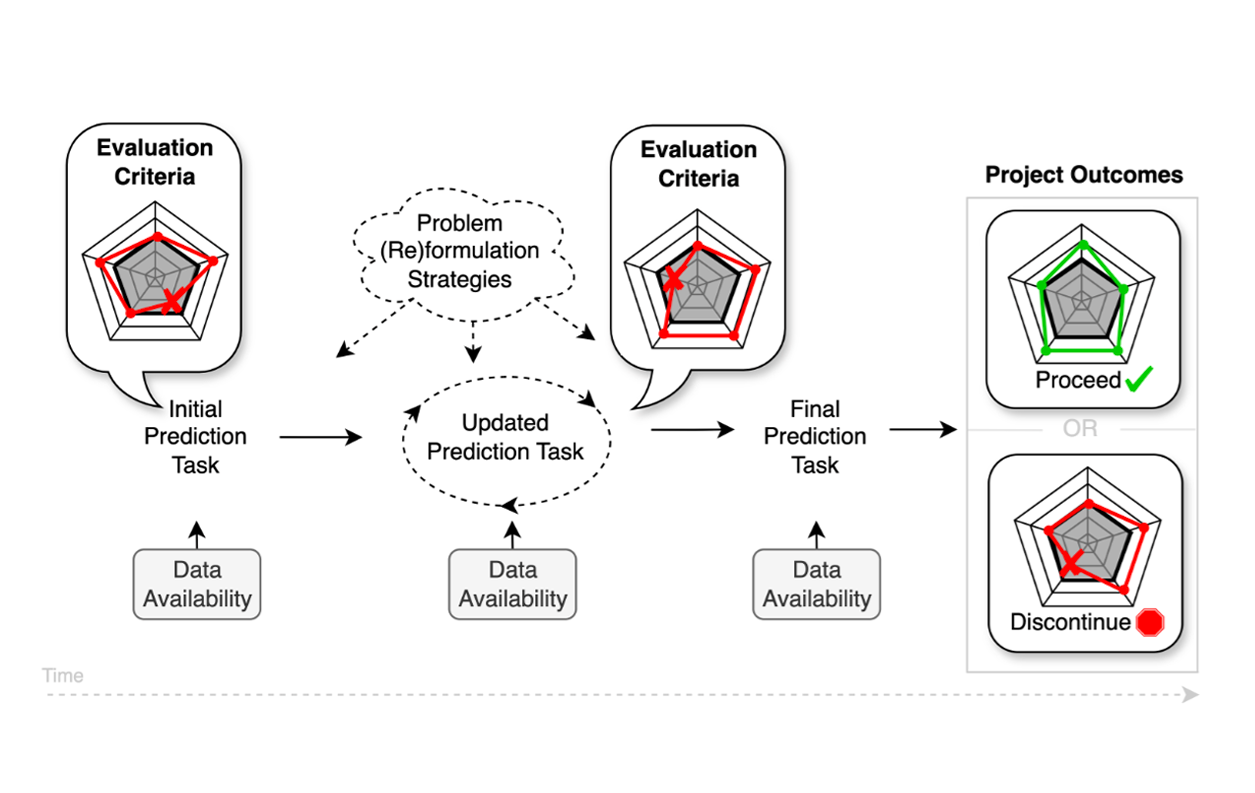

Ground(less) truth: A causal framework for proxy labels in human-algorithm decision-making [FAccT’23]

Guerdan, L., Coston, A., Wu, Z. S., & Holstein, K.

PolicyCraft: Supporting collaborative and participatory policy design through case-grounded deliberation [CHI’25]

Kuo, T. S., Chen, Q. Z., Zhang, A. X., Hsieh, J., Zhu, H.**, & Holstein, K.**

Understanding frontline workers’ and unhoused individuals’ perspectives on AI used in homeless services [CHI’23]

Best Paper Award

Kuo, T.*, Shen, H.*, Geum, J. S., Jones, N., Hong, J. I., Zhu, H.**, Holstein, K.**

Student learning benefits of a mixed-reality teacher awareness tool in AI-enhanced classrooms [AIED’18]

Best Paper Award

Holstein, K., McLaren, B. M., & Aleven, V.

(also see our follow-up article: AI Magazine ’22)

Human-AI partnerships in child welfare: Understanding worker practices, challenges, and desires for algorithmic decision support [CHI’22]

Best Paper Honorable Mention Award

Kawakami, A., Sivaraman, V., Cheng, H., Stapleton, L., Cheng, Y., Qing, D., Perer, A., Wu, Z. S., Zhu, H., & Holstein, K.

Improving human-machine augmentation via metaknowledge of unobservables [SSRN’26]

De-Arteaga, M.** & Holstein, K.**

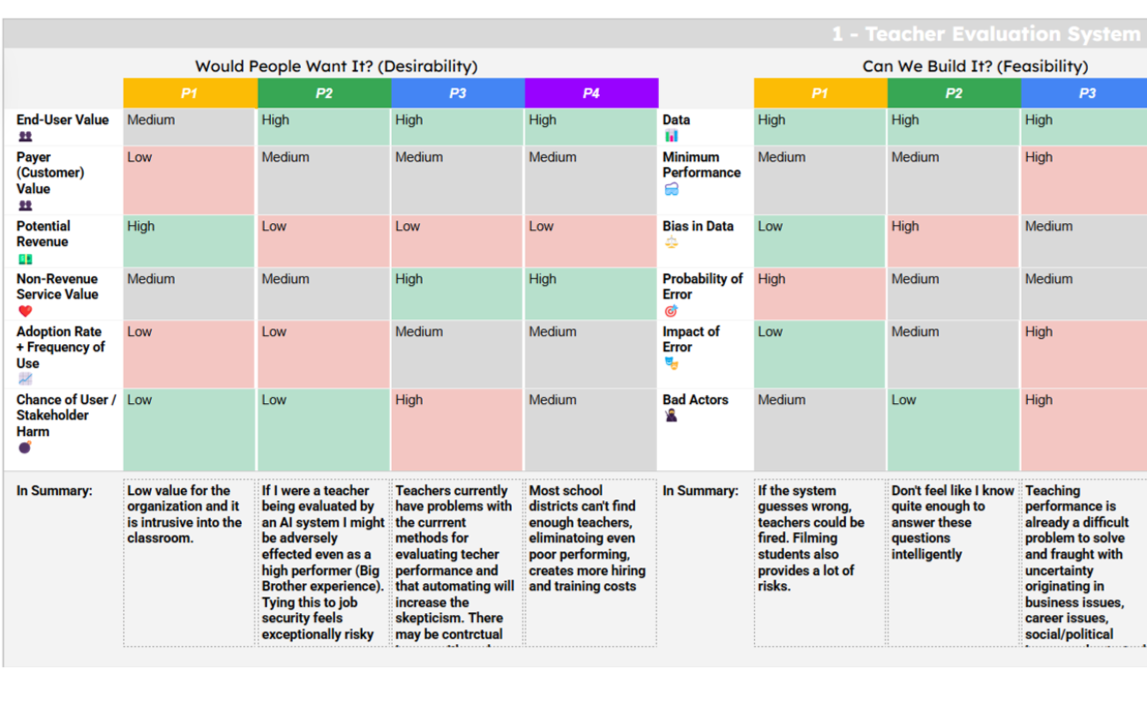

Making the right thing: Bridging HCI and responsible AI in early-stage AI concept selection [DIS’25]

Best Paper Honorable Mention Award

Jung, J. Y.*, Saxena, D.*, Park, M., Kim, J., Forlizzi, J., Holstein, K., Zimmerman, J.

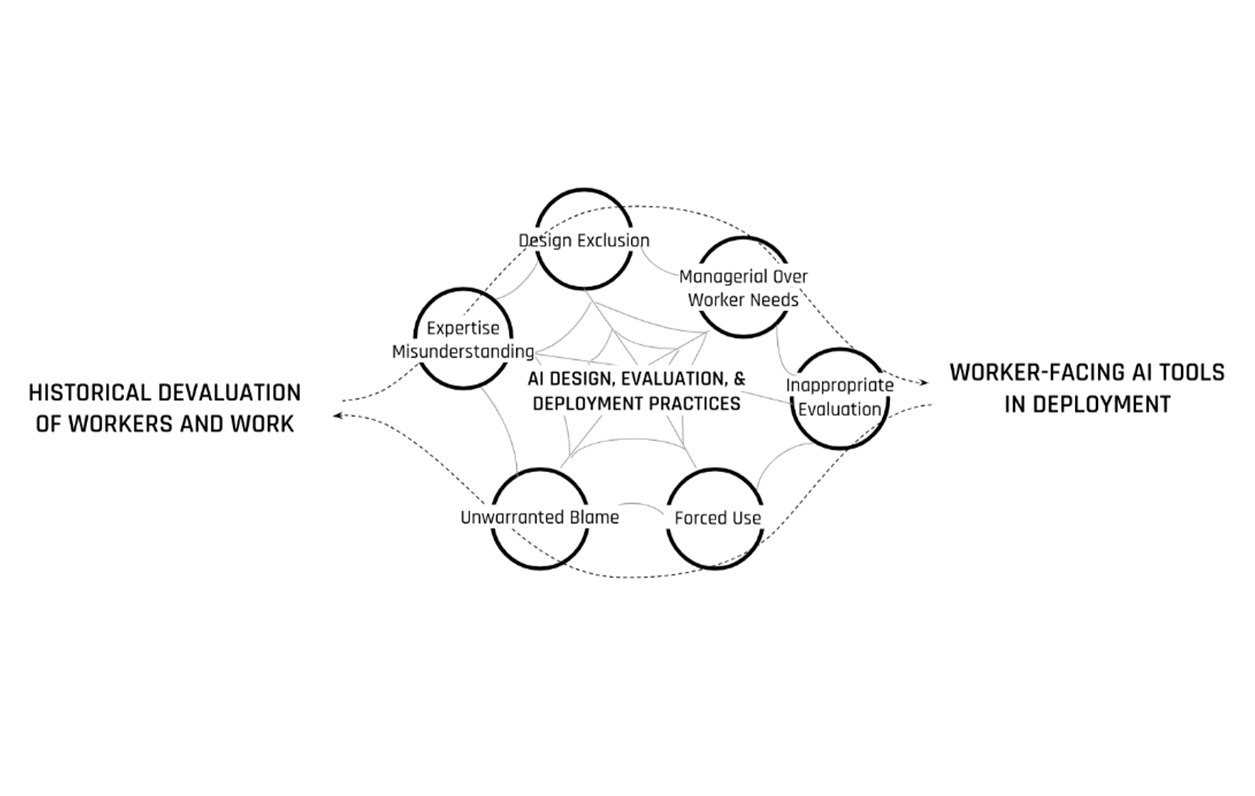

AI failure loops in devalued work: The confluence of overconfidence in AI & underconfidence in worker expertise [BD&S’26]

Kawakami, A., Taylor, J., Fox, S., Zhu, H., & Holstein, K.

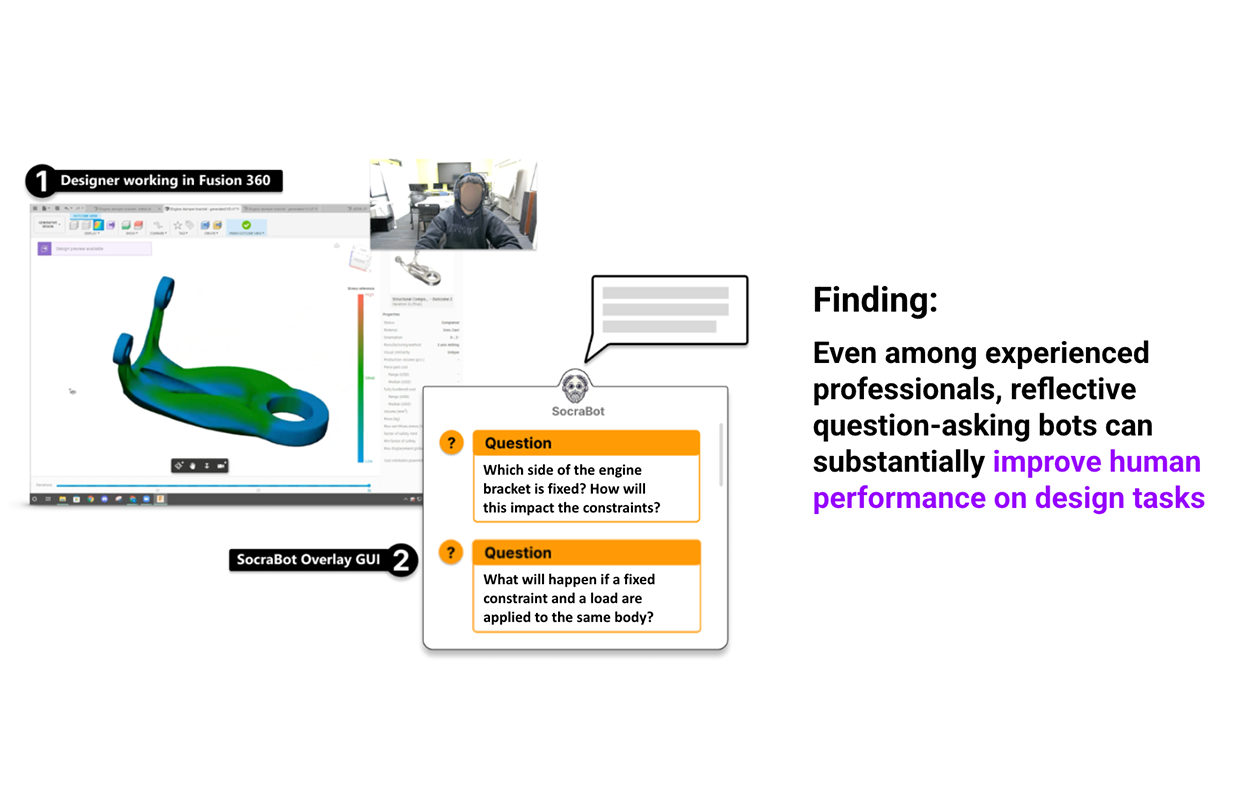

Exploring challenges and opportunities to support designers in learning to co-create with AI-based manufacturing design tools [CHI’23]

Best Paper Honorable Mention Award

Gmeiner, F., Yang, H., Yao, L., Holstein, K., Martelaro, N.

Wikibench: Community-driven data curation for AI evaluation on Wikipedia [CHI’24]

Kuo, T., Halfaker, A. L., Cheng, Z., Kim, J., Wu, M., Wu, T., Holstein, K.**, & Zhu, H.**

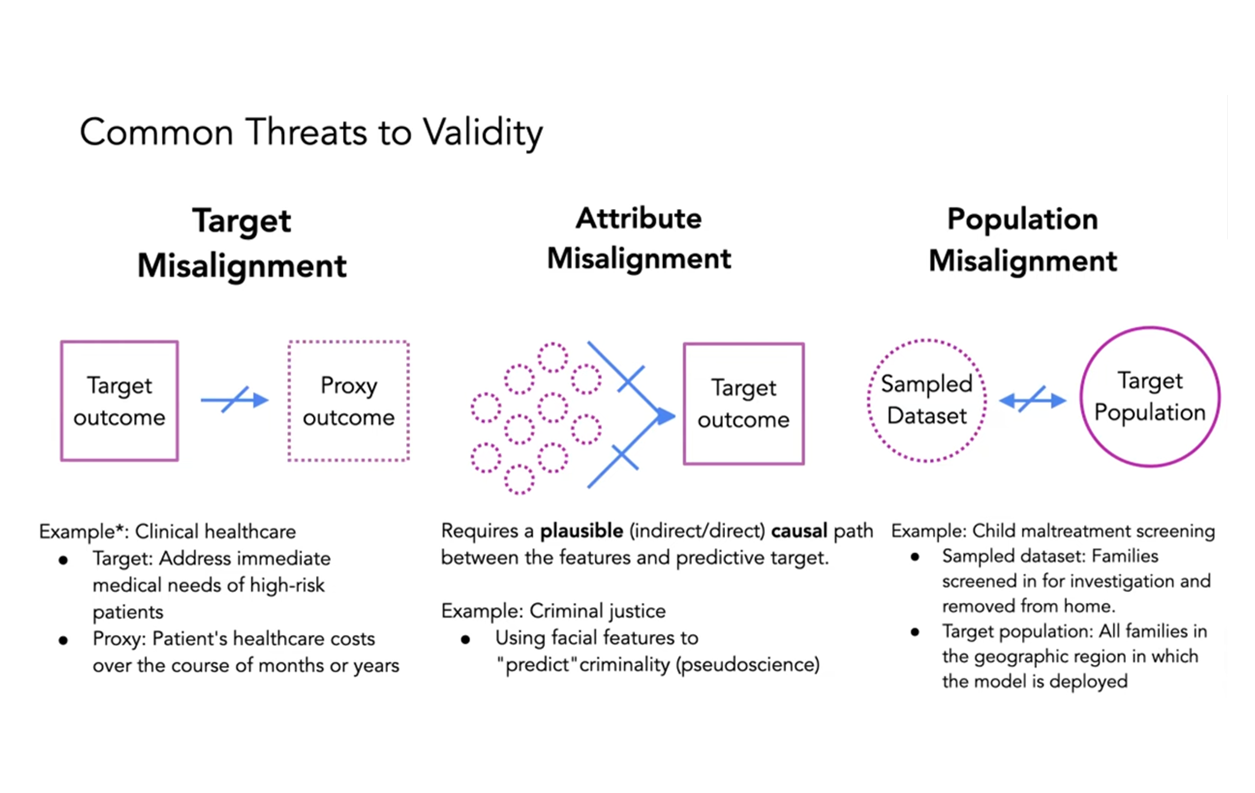

A validity perspective on evaluating the justified use of data-driven decision-making algorithms [SaTML’23]

Best Paper Award

Coston, A., Kawakami, A., Zhu, H., Holstein, K., & Heidari, H.

Measurement as bricolage: Examining how data scientists construct target variables for predictive modeling tasks [CSCW’25]

Guerdan, L.*, Saxena, D.*, Chancellor, S.**, Wu, Z. S.**, Holstein, K.**

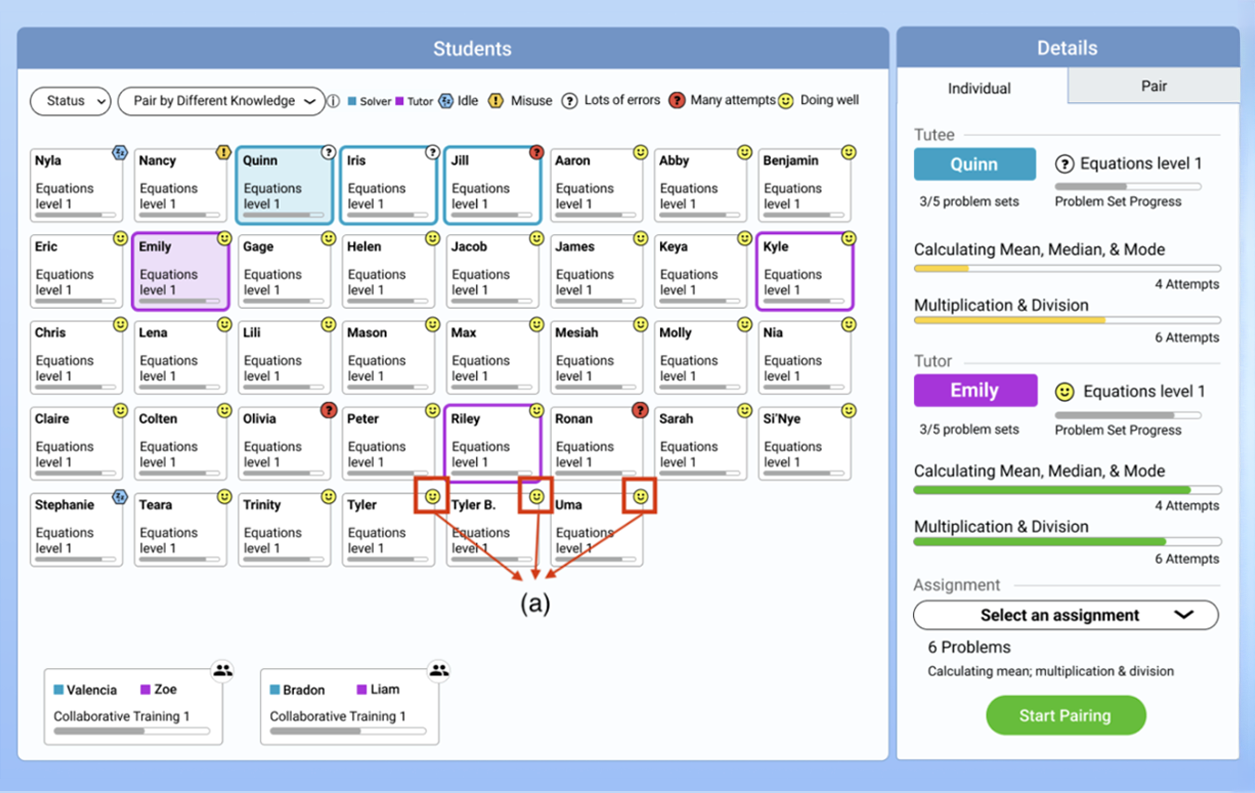

Pair-Up: Human-AI co-orchestration of dynamic transitions between individual and collaborative learning in the classroom [CHI’23]

Yang, K., Echeverria, V., Lu, Z., Mao, H., Holstein, K., Rummel, N., Aleven, V.

Improving fairness in machine learning systems: What do industry practitioners need? [CHI’19]

Holstein, K., Wortman Vaughan, J., Daumé III, H., Dudik, M., & Wallach, H.

Everyday algorithm auditing: Understanding the power of everyday users in surfacing harmful algorithmic behaviors [CSCW’21]

Shen, H.*, DeVos, A.*, Eslami, M.**, & Holstein, K.**

The Situate AI Guidebook: Co-designing a toolkit to support multi-stakeholder, early-stage deliberations around public sector AI proposals [CHI’24]

Kawakami, A., Coston, A., Zhu, H.**, Heidari, H.**, & Holstein, K.**

Courses

Interested in working with us?

If you are interested in collaborating or joining the group, please get in touch!